DevOps Lab: Run Your Own VPN Server

VPNs enable many applications and technologies. In this post, we will focus on one of them: client VPN.

Connecting To A Private Network

If the server you are trying to access is on a private network, you can use a VPN to connect to that network as if your machine were physically plugged into it. This is useful when you need to reach resources or devices that are only available on the private network.
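Private networks usually use the IPv4 ranges reserved by RFC 1918 (10.0.0.0/8, 172.16.0.0/12 and 192.168.0.0/16), which are not routed on the public internet. The minimal Python sketch below uses the standard `ipaddress` module to decide whether a destination falls inside the subnets a client VPN would route for you. The subnet list and the `needs_vpn` helper are hypothetical examples, not settings from any particular VPN product.

```python
import ipaddress

# Hypothetical subnets reachable only through the VPN (the RFC 1918 private ranges).
VPN_ROUTED_SUBNETS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def needs_vpn(destination: str) -> bool:
    """Return True if the destination IP lies inside one of the VPN-routed subnets."""
    addr = ipaddress.ip_address(destination)
    # Membership tests between IPv4 and IPv6 objects simply return False.
    return any(addr in subnet for subnet in VPN_ROUTED_SUBNETS)


if __name__ == "__main__":
    for host in ["10.0.5.20", "192.168.1.10", "93.184.216.34"]:
        route = "through the VPN" if needs_vpn(host) else "directly"
        print(f"{host}: reach {route}")
```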

DevOps Lab: Run Your Own Log Server

syslog

Syslog is a standard for logging system events on Unix and Linux systems. It is used to collect and store log messages from many sources, such as the kernel, system daemons and applications. Syslog follows a client-server model: programs send log messages to a syslog daemon (for example rsyslog or syslog-ng), which writes them to log files. The daemon can also forward messages to a central syslog server or to a log management system for further analysis. Syslog messages use a simple text-based format, which makes them easy to read and analyze. Each message also carries a facility (the kind of program that produced it) and one of eight severity levels, from emergency (0) to debug (7), so applications can categorize their messages by importance.
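To see the client-server model and the severity levels in action, here is a minimal Python sketch built on the standard library's `logging.handlers.SysLogHandler`. A plain UDP socket on localhost stands in for the central syslog server (the conventional syslog port is UDP 514; the sketch lets the OS pick a free port instead). `SysLogHandler` sends a minimal `<PRI>message` datagram, where PRI encodes facility and severity as facility * 8 + severity. The logger name, the message text and the helper functions are made up for illustration.

```python
import logging
import logging.handlers
import socket


def start_collector(host: str = "127.0.0.1") -> socket.socket:
    """Toy stand-in for a central syslog server: a UDP socket on a free port."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((host, 0))  # port 0 lets the OS choose; real syslog uses 514
    sock.settimeout(2.0)
    return sock


def syslog_priority(facility: int, severity: int) -> int:
    """PRI value at the start of each syslog message."""
    return facility * 8 + severity


def send_demo_message(server_addr) -> None:
    """Send one WARNING-level message with facility 'user' to server_addr."""
    logger = logging.getLogger("demo-app")
    logger.setLevel(logging.INFO)
    handler = logging.handlers.SysLogHandler(
        address=server_addr,
        facility=logging.handlers.SysLogHandler.LOG_USER,  # facility 1
    )
    logger.addHandler(handler)
    try:
        logger.warning("disk usage above 90 percent")  # severity 4 (warning)
    finally:
        logger.removeHandler(handler)
        handler.close()


if __name__ == "__main__":
    collector = start_collector()
    send_demo_message(collector.getsockname())
    data, _ = collector.recvfrom(4096)
    print(data)  # b'<12>disk usage above 90 percent\x00'
    collector.close()
```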

DevOps Lab: Run Your Own Monitoring Server

There are many tools and software programs that can be used for monitoring and performance analysis on Linux systems. Some popular options include:

- top - This is a command-line utility that shows real-time information about the processes running on a Linux system, such as their CPU and memory usage.

- htop - This is an interactive alternative to top with a more user-friendly interface and additional features, such as vertical and horizontal scrolling, mouse support, and killing or renicing processes without typing their PIDs.

- sar - This is a command-line utility that collects and displays performance metrics for a Linux system over time. It can be used to analyze CPU, memory, I/O, and network usage, as well as other metrics.

- iostat - This is a command-line utility that shows real-time information about I/O performance on a Linux system. It can be used to monitor the performance of disks and other storage devices.

- vmstat - This is a command-line utility that shows real-time information about various system resources, such as memory, CPU, and I/O. It can be used to monitor the overall health of a Linux system.

- netstat - This is a command-line utility that shows information about network connections on a Linux system. It can be used to monitor the status of network connections and to diagnose networking issues. On many modern distributions, netstat (part of net-tools) is deprecated in favor of ss from iproute2.
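Most of these utilities read their numbers from the Linux /proc filesystem; free and vmstat, for example, take their memory figures from /proc/meminfo. As a rough illustration, the Python sketch below parses /proc/meminfo-style text and derives the share of memory in use from the MemTotal and MemAvailable fields. The helper names are hypothetical, and the "used" definition is a simplification, not the exact formula any of these tools prints.

```python
from pathlib import Path


def parse_meminfo(text: str) -> dict[str, int]:
    """Parse lines such as 'MemTotal:  16318576 kB' into {'MemTotal': 16318576}."""
    values = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        name, rest = line.split(":", 1)
        fields = rest.split()
        if fields:
            # Most values are in kB; a few counters (e.g. HugePages_Total) have no unit.
            values[name.strip()] = int(fields[0])
    return values


def memory_used_percent(meminfo: dict[str, int]) -> float:
    """Percentage of memory not available to new processes."""
    total = meminfo["MemTotal"]
    available = meminfo["MemAvailable"]
    return 100.0 * (total - available) / total


if __name__ == "__main__":
    path = Path("/proc/meminfo")  # only exists on Linux
    if path.exists():
        info = parse_meminfo(path.read_text())
        print(f"memory used: {memory_used_percent(info):.1f}%")
```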

There are also many modern monitoring tools available for Linux, such as Prometheus and Zabbix. These tools typically offer more advanced features than the built-in Linux utilities, such as collecting metrics from many hosts into a central store, keeping a long-term history of those metrics, and generating alerts when certain conditions are met.
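Prometheus collects metrics by periodically scraping an HTTP endpoint (conventionally /metrics) that returns plain text in its exposition format: optional "# HELP" and "# TYPE" lines per metric, followed by "name value" lines. The Python sketch below renders gauges in that format and serves them with the standard library only. The metric names, the port and the `serve` helper are hypothetical; for real use, the official prometheus_client library or node_exporter would be the usual choice.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


def render_gauges(gauges: dict[str, tuple[str, float]]) -> str:
    """Render {name: (help text, value)} in Prometheus text exposition format."""
    lines = []
    for name, (help_text, value) in gauges.items():
        lines.append(f"# HELP {name} {help_text}")
        lines.append(f"# TYPE {name} gauge")
        lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


def collect() -> dict[str, tuple[str, float]]:
    """Gather current values; os.getloadavg() exists on Unix-like systems only."""
    load1, load5, _ = os.getloadavg()
    return {
        "demo_load1": ("1-minute load average", load1),
        "demo_load5": ("5-minute load average", load5),
    }


class MetricsHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_gauges(collect()).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 9999) -> None:
    """Block forever, answering Prometheus scrapes on the given port."""
    HTTPServer(("0.0.0.0", port), MetricsHandler).serve_forever()
```

Calling serve() and adding host:9999 as a scrape target in Prometheus would let it record these values over time and alert on them.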

DevOps Lab: Run Your Own Email Server

To run your own email server with Linux and other open source software, first choose a Linux distribution and install it on your server. I'd go with Ubuntu, Debian or Rocky Linux. Next, install a mail transfer agent (MTA) such as Postfix or Exim. After installing and configuring it, set up the DNS records for your domain (an MX record pointing to the server, plus SPF, DKIM and DMARC records) and configure authentication and TLS encryption so that your email server is secure. Finally, test your email server to make sure it works properly and can send and receive messages.
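For the testing step, one quick check of outbound mail is to submit a message to your server with Python's standard smtplib. This is a minimal sketch under assumed settings: the server accepts authenticated submission with STARTTLS on port 587, and every host name, address and password used with it is a placeholder.

```python
import smtplib
import ssl
from email.message import EmailMessage


def build_test_message(sender: str, recipient: str) -> EmailMessage:
    """Create a short plain-text test message."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = "Test message from my new mail server"
    msg.set_content("If you can read this, outbound mail works.")
    return msg


def send_test_message(msg: EmailMessage, host: str, port: int, user: str, password: str) -> None:
    """Submit msg over STARTTLS with SMTP authentication."""
    context = ssl.create_default_context()  # verifies the server's TLS certificate
    with smtplib.SMTP(host, port, timeout=30) as smtp:
        smtp.starttls(context=context)  # upgrade to TLS before sending credentials
        smtp.login(user, password)
        smtp.send_message(msg)
```

For example, send_test_message(build_test_message("admin@example.com", "you@example.org"), "mail.example.com", 587, "admin@example.com", password) with your own values. Then check that the message arrives and reply to it to exercise the receiving side.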

DevOps Lab: Run Your Own Load Balancer

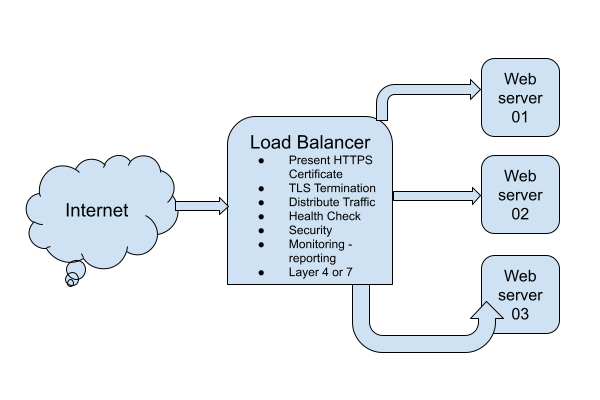

Definition

A load balancer is software or hardware that distributes incoming traffic across multiple servers or other resources. Spreading the workload evenly keeps any single server from becoming a bottleneck, which improves the performance and availability of the application.
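The simplest way to spread requests evenly is round robin: hand each new request to the next server in the list and wrap around at the end. Nginx uses it by default for an upstream group, and HAProxy offers it as well, alongside other algorithms. A minimal Python sketch, with hypothetical backend addresses:

```python
from collections import Counter
from itertools import cycle


class RoundRobinBalancer:
    """Hand out backends in a fixed, repeating order."""

    def __init__(self, backends: list[str]):
        if not backends:
            raise ValueError("need at least one backend")
        self._ring = cycle(backends)

    def pick(self) -> str:
        return next(self._ring)


if __name__ == "__main__":
    # Hypothetical backend addresses.
    lb = RoundRobinBalancer(["10.0.0.11:8080", "10.0.0.12:8080", "10.0.0.13:8080"])
    counts = Counter(lb.pick() for _ in range(9))
    print(counts)  # each backend is picked 3 times
```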

The Load Balancer Lab

To run your own load balancer using open source software, you will need to:

- Install and configure the load balancer software on a server. Some popular open source options include HAProxy, Nginx, and Envoy.

- Configure the load balancer to distribute incoming traffic to the appropriate servers or resources. This typically involves defining a pool of backend servers and the rules for routing traffic to them (for example, frontend and backend sections in HAProxy, or an upstream block in Nginx).

- Test the load balancer to ensure that it is working correctly and distributing traffic as expected.

- Monitor the load balancer and the underlying servers to ensure that the system is performing well and handling traffic effectively.

- Continually tune and optimize the load balancer configuration to improve performance and ensure that the application is always available and responsive.
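Testing and monitoring go together: a load balancer should stop sending traffic to a backend that fails its health check, and a test run should show requests spread evenly over the healthy backends. The Python sketch below simulates both in-process. The backend names, the `mark` method and the `distribution` helper are hypothetical stand-ins for real health checks (such as HTTP probes) and real test traffic.

```python
from collections import Counter


class HealthAwareBalancer:
    """Round robin that skips backends currently marked unhealthy."""

    def __init__(self, backends: list[str]):
        self.backends = list(backends)
        self.healthy = {b: True for b in self.backends}
        self._next = 0

    def mark(self, backend: str, is_healthy: bool) -> None:
        # In a real setup, periodic health checks would call this.
        self.healthy[backend] = is_healthy

    def pick(self) -> str:
        # Try each backend at most once, starting where we left off.
        for _ in range(len(self.backends)):
            backend = self.backends[self._next]
            self._next = (self._next + 1) % len(self.backends)
            if self.healthy[backend]:
                return backend
        raise RuntimeError("no healthy backends")


def distribution(lb: HealthAwareBalancer, requests: int) -> Counter:
    """Send simulated requests and count how many each backend received."""
    return Counter(lb.pick() for _ in range(requests))


if __name__ == "__main__":
    lb = HealthAwareBalancer(["web1", "web2", "web3"])
    lb.mark("web2", False)  # simulate a failed health check
    print(distribution(lb, 10))  # web1 and web3 share the traffic
```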

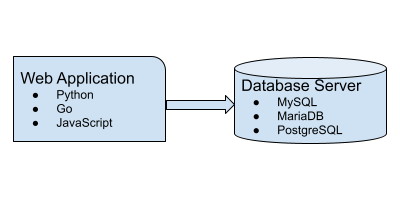

DevOps Lab: Run Your Own Database Server

Your web application needs a way to store and retrieve its data, and web applications often use a relational database for that. MySQL, MariaDB and PostgreSQL are popular relational databases; there's also SQLite, a lightweight file-based option. Many applications can work with any of these thanks to a database abstraction layer (such as an ORM). If you are writing your own web application, pick one database and install it on your web server.

Install The Database

- Install the package.

- Enable and start the database systemd unit.

- Initialize the database server.

- Create databases.

- Create database users and set their passwords.
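Server databases such as MySQL, MariaDB and PostgreSQL go through all of the steps above. SQLite is the exception: it is an embedded library with no server process to start and no built-in user accounts, so creating a database just means opening a file. That makes it a convenient way to try the create-and-query part of the workflow with Python's built-in sqlite3 module. The table, column and function names below are hypothetical.

```python
import sqlite3


def init_db(path: str) -> sqlite3.Connection:
    """Open (or create) the database and make sure the schema exists."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS posts ("
        " id INTEGER PRIMARY KEY,"
        " title TEXT NOT NULL)"
    )
    conn.commit()
    return conn


def add_post(conn: sqlite3.Connection, title: str) -> int:
    # The ? placeholder keeps user input out of the SQL string.
    cur = conn.execute("INSERT INTO posts (title) VALUES (?)", (title,))
    conn.commit()
    return cur.lastrowid


def list_titles(conn: sqlite3.Connection) -> list[str]:
    return [row[0] for row in conn.execute("SELECT title FROM posts ORDER BY id")]


if __name__ == "__main__":
    conn = init_db(":memory:")  # use a file path such as "app.db" to persist data
    add_post(conn, "Hello, DevOps")
    print(list_titles(conn))  # ['Hello, DevOps']
    conn.close()
```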

Connect The Web Application To The Database Server
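A common pattern, shown here as a minimal sketch rather than the post's own approach, is to pass connection settings to the web application through environment variables and open the connection in the application code. This version uses Python's standard WSGI interface and sqlite3 so it runs without extra packages; the DATABASE_PATH variable and the posts table are hypothetical. With MySQL, MariaDB or PostgreSQL you would read a host, port, user and password the same way and pass them to that database's driver.

```python
import os
import sqlite3


def get_connection() -> sqlite3.Connection:
    """Connect using the (hypothetical) DATABASE_PATH environment variable."""
    path = os.environ.get("DATABASE_PATH", "app.db")
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS posts (id INTEGER PRIMARY KEY, title TEXT NOT NULL)"
    )
    return conn


def app(environ, start_response):
    """WSGI application that lists post titles from the database as plain text."""
    conn = get_connection()
    try:
        titles = [row[0] for row in conn.execute("SELECT title FROM posts ORDER BY id")]
    finally:
        conn.close()
    body = ("\n".join(titles) + "\n").encode("utf-8")
    start_response(
        "200 OK",
        [("Content-Type", "text/plain; charset=utf-8"), ("Content-Length", str(len(body)))],
    )
    return [body]
```

To try it locally, pass app to wsgiref.simple_server.make_server("127.0.0.1", 8000, app) and call serve_forever() on the result; in production, a WSGI server behind Nginx would usually take that role.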

Breaking Into DevOps: Training Tips

I see a lot of people trying to break into software engineering and DevOps. There are many inspiring stories out there: people from other industries and verticals have switched to IT and built successful careers. You can do it too.

Let us look at the resources available to you.

Online Vs. Offline Training And Learning Resources

Online

All you need to break into DevOps is:

DevOps Lab: Run Your Own Web Server

Once upon a time, Apache was the de facto web server. Later, Nginx became popular. If you are getting started with DevOps and Linux system administration, I recommend starting with Nginx.

Start With A Static Website

What is a static website? A website made from HTML and CSS, and maybe some JavaScript, images, videos, fonts, etc. The key takeaway is that no server-side application is involved.

Install the Nginx web server on your Linux VM and configure it to serve a static website. You will need a static website as a prerequisite: create one by assembling some HTML, CSS, JavaScript and images. Optionally, add some fonts and videos. Access the website from your web browser by typing the IP address of the web server in the address bar.

Take it to the next level by pointing the DNS A record of your domain to the VM. For our purposes, a fake domain or a local unregistered domain is sufficient. Manipulating /etc/hosts is also fine. Enjoy viewing the website from the browser.
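Before pointing Nginx or DNS at it, you can sanity-check the static site locally. This Python sketch writes a tiny hypothetical site (index.html and style.css) into a directory, serves it with the standard library's http.server as a stand-in for Nginx, and fetches the page the way a browser would. It is only a preview tool: the Python documentation does not recommend http.server for production, which is why the lab uses Nginx.

```python
import tempfile
import threading
import urllib.request
from functools import partial
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer
from pathlib import Path


def write_site(root: Path) -> None:
    """Create a minimal static website: one HTML page and one stylesheet."""
    (root / "index.html").write_text(
        "<!doctype html>\n<html><head><title>My Static Site</title>"
        '<link rel="stylesheet" href="style.css"></head>'
        "<body><h1>Hello from my VM</h1></body></html>\n",
        encoding="utf-8",
    )
    (root / "style.css").write_text("body { font-family: sans-serif; }\n", encoding="utf-8")


def preview(root: Path) -> str:
    """Serve root on a free localhost port, fetch '/', and return the HTML."""
    handler = partial(SimpleHTTPRequestHandler, directory=str(root))
    server = ThreadingHTTPServer(("127.0.0.1", 0), handler)  # port 0: OS picks one
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        port = server.server_address[1]
        with urllib.request.urlopen(f"http://127.0.0.1:{port}/", timeout=5) as resp:
            return resp.read().decode("utf-8")
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        write_site(Path(tmp))
        print(preview(Path(tmp)))
```

On the VM, the equivalent Nginx step is pointing a server block's root directive at the directory that holds these files.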

Age To Encrypt Secrets

Are you storing secrets such as database credentials, API keys, etc. unencrypted in Git repositories? Stop.

To protect your secrets, do not store them anywhere unencrypted, especially in Git repositories. Ideally, your organization should have a vault solution where secrets can be stored and securely shared with people on a need-to-know basis. In many small organizations, such a central secrets management solution is still a luxury, so storing secrets in Git repositories becomes a practical necessity. If you must do that, encrypt them first. There are a few ways to encrypt secrets; we discussed Ansible Vault in a previous blog post.
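age, the tool in the title, is a simple file encryption tool: you generate a key pair with age-keygen, encrypt with the public recipient key (-r), and decrypt with the private identity file (-i). The Python sketch below wraps those CLI calls with subprocess and adds a hypothetical pre-commit style check that flags files not starting with one of the headers age writes (the binary format begins with age-encryption.org/v1; --armor output begins with -----BEGIN AGE ENCRYPTED FILE-----). It assumes age is installed and on PATH; the function names are illustrative, and the header check is a heuristic, not a full format validator.

```python
import subprocess
from pathlib import Path

AGE_BINARY_HEADER = b"age-encryption.org/v1\n"
AGE_ARMOR_HEADER = b"-----BEGIN AGE ENCRYPTED FILE-----"


def encrypt(plaintext: Path, ciphertext: Path, recipient: str) -> None:
    """Encrypt a file for an age public key (age1...) as ASCII-armored text."""
    subprocess.run(
        ["age", "--encrypt", "--armor", "-r", recipient, "-o", str(ciphertext), str(plaintext)],
        check=True,
    )


def decrypt(ciphertext: Path, plaintext: Path, identity_file: Path) -> None:
    """Decrypt a file with the private key stored in identity_file."""
    subprocess.run(
        ["age", "--decrypt", "-i", str(identity_file), "-o", str(plaintext), str(ciphertext)],
        check=True,
    )


def looks_encrypted(data: bytes) -> bool:
    """Heuristic: True if data starts with a header that age writes."""
    return data.startswith(AGE_BINARY_HEADER) or data.lstrip().startswith(AGE_ARMOR_HEADER)


def unencrypted_files(paths: list[Path]) -> list[Path]:
    """Return the files that should not be committed because they are not age-encrypted."""
    return [p for p in paths if not looks_encrypted(p.read_bytes())]
```

Commit only the encrypted files, keep the identity file out of the repository, and list the plaintext file names in .gitignore.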

Kubernetes Objects Required For A Typical Web Application: Part I

As an application developer and Kubernetes user, you need a working knowledge of Kubernetes. This post outlines the most important Kubernetes objects required to deploy a typical web application. Let us assume that the web application uses a two-tier architecture. We also assume that an infrastructure or DevOps engineer creates and administers the cluster and has given the developer the access needed to deploy the web application onto it. All Kubernetes operations are performed from the web application developer's perspective.
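A typical web application usually needs at least a Deployment (which runs and replaces the application's Pods) and a Service (which gives those Pods a stable network endpoint); the full list of objects in this series may be longer. The Python sketch below builds both as plain dictionaries and prints them as a JSON List, which kubectl apply -f accepts just like YAML. The name web, the image example/web:1.0 and the port numbers are hypothetical.

```python
import json


def deployment(name: str, image: str, replicas: int, port: int) -> dict:
    """Deployment that keeps `replicas` Pods of `image` running."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},  # must match the Pod template labels
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


def service(name: str, port: int, target_port: int) -> dict:
    """Service that routes traffic on `port` to the Pods' `target_port`."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": {"app": name},  # selects Pods carrying this label
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    manifest = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            deployment("web", "example/web:1.0", replicas=2, port=8080),
            service("web", port=80, target_port=8080),
        ],
    }
    print(json.dumps(manifest, indent=2))
```

Redirect the output to a file such as web.json and run kubectl apply -f web.json against the cluster you were given access to.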