Writing A Kubernetes Controller: Part I

By Sudheer S

This is a guide to writing a Kubernetes controller. We will start by inspecting the Kubernetes API from inside a pod within the cluster. Minikube suffices for this exercise, but you can run it on any Kubernetes cluster.

The controller watches events related to Kubernetes pods using the Kubernetes API. When there is a new event, the controller logs the event’s type and the name of the affected pod. This controller can be extended to perform other actions when pod events occur, such as scaling the number of replicas for a deployment, sending notifications, or triggering a custom script or program.

Prerequisites

You should have a working knowledge of:

- Kubernetes

- Minikube

- Python

- Linux command line

We will launch an Ubuntu pod in the cluster and then exec into the container within the pod.

Step 1: Start the Minikube cluster:

minikube start

Before we can begin writing our Kubernetes controller, we need to have a Kubernetes cluster to work with. In this tutorial, we will be using Minikube, which is a tool to run a Kubernetes cluster locally on our laptop or desktop.

To start the Minikube cluster, we will use the minikube start command. This will create a new Kubernetes cluster with a single node, using a default configuration.

Once the Minikube cluster is running, we can use kubectl commands to interact with the Kubernetes API and create resources such as pods, deployments, and services.

Step 2: Set the kubectl context to the Minikube cluster

kubectl config use-context minikube

Once we have started the Minikube cluster, we need to configure kubectl to use it as the current context.

A context in kubectl is a set of access parameters for a Kubernetes cluster, including the cluster name, the API server address, and the credentials to authenticate with the API server. By setting the context to the Minikube cluster, we can use kubectl commands to interact with the API server running in the Minikube cluster.

To set the current context to the Minikube cluster, we will use the kubectl config use-context command. This command takes the name of the context we want to use as an argument, which in this case is minikube.

kubectl supports multiple contexts, which allows you to switch between different Kubernetes clusters or namespaces without having to modify the configuration file manually. You can use the kubectl config get-contexts command to view a list of available contexts, and the kubectl config use-context command to switch between them.
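To make the idea of a context concrete, here is a minimal sketch of how a current context resolves to a cluster and a user. The dictionary mirrors the general shape of a kubeconfig file, but the server address is a made-up value and the `resolve_context` helper is hypothetical; kubectl performs this lookup for you.

```python
# A simplified, hypothetical kubeconfig held as a Python dict.
# Real kubeconfig files are YAML and carry more fields (certificates, tokens).
KUBECONFIG = {
    "current-context": "minikube",
    "clusters": [
        # The server address below is an illustrative value, not a real one.
        {"name": "minikube", "cluster": {"server": "https://192.168.49.2:8443"}},
    ],
    "users": [
        {"name": "minikube", "user": {}},
    ],
    "contexts": [
        {
            "name": "minikube",
            "context": {"cluster": "minikube", "user": "minikube", "namespace": "default"},
        },
    ],
}


def resolve_context(kubeconfig, name=None):
    """Return the server, user, and namespace that a context points to."""
    name = name or kubeconfig["current-context"]
    contexts = {c["name"]: c["context"] for c in kubeconfig["contexts"]}
    clusters = {c["name"]: c["cluster"] for c in kubeconfig["clusters"]}
    if name not in contexts:
        raise KeyError(f"no context named {name!r}")
    ctx = contexts[name]
    return {
        "server": clusters[ctx["cluster"]]["server"],
        "user": ctx["user"],
        "namespace": ctx.get("namespace", "default"),
    }


print(resolve_context(KUBECONFIG))
```

Switching contexts with kubectl config use-context amounts to changing the current-context entry, which is why no other part of the file needs to be edited.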

Step 3: Create a service account: sa.yaml

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: mycontrollersa
  namespace: default

kubectl apply -f sa.yaml

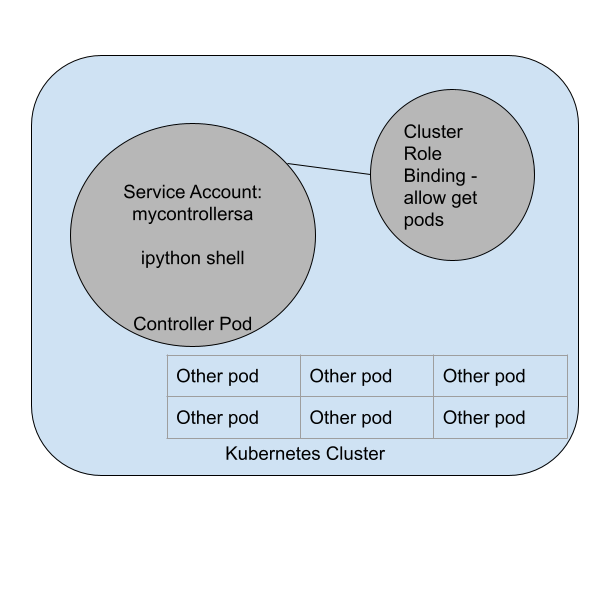

In order to access and interact with the Kubernetes API, we need to create a service account that our controller can use to authenticate with the API server.

A service account in Kubernetes provides an identity and a set of credentials that are used to access the Kubernetes API. Service accounts are associated with namespaces and can have different permissions and roles assigned to them.

To create a service account for our controller, we will create a YAML file called sa.yaml that defines the service account. In this file, we will specify the apiVersion, kind, and metadata fields for the service account object.

In this tutorial, we will create a service account called mycontrollersa in the default namespace. This service account will be used by our controller to authenticate with the Kubernetes API.

Once the sa.yaml file has been created, we can use the kubectl apply command to create the service account in the Kubernetes cluster.

After creating the service account, we need to grant it the necessary permissions to access the Kubernetes API. This is done by creating a cluster role and cluster role binding that allow the service account to read events related to Kubernetes pods. We will cover this in more detail in Step 4.

By creating a service account for our controller, we can ensure that it has the necessary credentials and permissions to interact with the Kubernetes API and manage resources in the cluster.

Step 4: Create the cluster role and cluster role binding to allow the service account to read the events: rbac.yaml

In this step, we will create a cluster role and a cluster role binding to allow our controller to read events related to Kubernetes pods. The service account mycontrollersa that we created in Step 3 is the subject of the binding.

First, we will create a cluster role called my-controller-cluster-role. This cluster role grants the get, list, and watch verbs on pods across the cluster.

Next, we will create a cluster role binding that associates the service account with the cluster role. This ensures that the service account has the necessary permissions to access the pods.

After creating the cluster role and cluster role binding, we will verify that the service account can get pods in the default namespace and the kube-system namespace using the kubectl auth can-i command.

By following these steps, we can ensure that our controller has the necessary permissions to access the Kubernetes API and watch events related to pods.

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: my-controller-cluster-role
rules:
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: my-controller-cluster-role-binding
subjects:
  - kind: ServiceAccount
    name: mycontrollersa
    namespace: default
roleRef:
  kind: ClusterRole
  name: my-controller-cluster-role
  apiGroup: rbac.authorization.k8s.io

kubectl apply -f rbac.yaml

Verify that the service account can get pods in the default namespace and the kube-system namespace:

kubectl auth can-i get pods --as=system:serviceaccount:default:mycontrollersa

kubectl auth can-i get pods --as=system:serviceaccount:default:mycontrollersa -n kube-system
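The two checks above can be read as questions asked against the ClusterRole rules. The following is a simplified sketch of that evaluation, not the API server's actual authorizer. The helpers `service_account_username`, `rule_allows`, and `can_i` are hypothetical, and the sketch ignores features such as resourceNames and subresources.

```python
# Rules copied from the ClusterRole in rbac.yaml.
RULES = [
    {"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "watch"]},
]


def service_account_username(namespace, name):
    """Build the user name that identifies a service account, as passed to --as."""
    return f"system:serviceaccount:{namespace}:{name}"


def rule_allows(rule, api_group, resource, verb):
    """Check one rule; "*" acts as a wildcard in any of the three lists."""
    def matches(values, wanted):
        return "*" in values or wanted in values

    return (
        matches(rule["apiGroups"], api_group)
        and matches(rule["resources"], resource)
        and matches(rule["verbs"], verb)
    )


def can_i(rules, api_group, resource, verb):
    """Allowed if any rule matches; RBAC rules only grant, they never deny."""
    return any(rule_allows(rule, api_group, resource, verb) for rule in rules)


print(service_account_username("default", "mycontrollersa"))
print(can_i(RULES, "", "pods", "get"))     # the verb is listed in the rule
print(can_i(RULES, "", "pods", "delete"))  # the verb is not listed
```

Because the role is attached with a ClusterRoleBinding rather than a namespaced RoleBinding, the grant applies in every namespace, which is why the check is expected to succeed for both default and kube-system.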

Step 5: Create a test pod with the Ubuntu container image: controller.yaml

---
apiVersion: v1
kind: Pod
metadata:
  name: test-pod-ubuntu
spec:
  serviceAccountName: mycontrollersa
  containers:
    - name: test-container
      image: ubuntu
      command: ['sleep', 'infinity']

kubectl apply -f controller.yaml

Before we can start writing our Kubernetes controller, we need to have a pod running in the Kubernetes cluster that we can use to test the controller. In this step, we will create a simple test pod that runs an Ubuntu container image.

To create the test pod, we will create a YAML file called controller.yaml that defines the pod. In this file, we will specify the apiVersion, kind, and metadata fields for the pod object, as well as any other fields that we want to configure.

In this tutorial, we will create a pod called test-pod-ubuntu with a single container running the Ubuntu image. We will also specify a service account for the pod called mycontrollersa, which is the service account that we created in Step 3.

Once the controller.yaml file has been created, use the kubectl apply command to create the pod in the Kubernetes cluster.

After the pod has been created, use the kubectl get pods command to verify that it is running and to view its status.

By creating a test pod with the Ubuntu container image, we can ensure that our controller is able to watch for events related to pods and perform actions based on those events. We will cover this in more detail in the next steps of the tutorial.

Step 6: Exec into the pod

kubectl exec -it test-pod-ubuntu -- bash

In order to run our Python code inside the Kubernetes cluster and interact with the Kubernetes API, we need to exec into the test pod that we created in Step 5.

kubectl exec is a command that allows us to run a command inside a running container in a pod. By using kubectl exec, we can access the shell inside the container and run commands or scripts just as if we were running them on our local machine.

To exec into the test pod, we will use the kubectl exec command with the name of the pod (test-pod-ubuntu). Because the pod has a single container (test-container), we do not need to select it with the -c option. The -it flags attach an interactive terminal, and bash after the -- separator is the command to run, which gives us an interactive shell inside the container.

Once we have exec'd into the pod, we can install the necessary packages and launch the Python interpreter to start writing our Kubernetes controller. We will cover this in more detail in the next steps of the tutorial.

By exec'ing into the test pod, we can ensure that our Python code is running inside the Kubernetes cluster and has access to the Kubernetes API. This is necessary for our controller to watch for events related to pods and perform actions based on those events.
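Part of what makes the pod a convenient place to work is that Kubernetes mounts the service account's credentials into the container and exposes the API server address through environment variables. The sketch below gathers those pieces using only the standard library. The `read_incluster_settings` helper is hypothetical; the mount path and variable names are the usual Kubernetes defaults, assuming token automounting has not been disabled for the pod.

```python
import os
from pathlib import Path

# Default mount point for service account credentials inside a pod.
SA_DIR = Path("/var/run/secrets/kubernetes.io/serviceaccount")


def read_incluster_settings(sa_dir=SA_DIR, environ=None):
    """Collect the API server URL, bearer token, CA path, and namespace."""
    environ = os.environ if environ is None else environ
    sa_dir = Path(sa_dir)
    host = environ["KUBERNETES_SERVICE_HOST"]
    port = environ["KUBERNETES_SERVICE_PORT"]
    return {
        "server": f"https://{host}:{port}",
        "token": (sa_dir / "token").read_text().strip(),
        "ca_cert": str(sa_dir / "ca.crt"),
        "namespace": (sa_dir / "namespace").read_text().strip(),
    }


if SA_DIR.is_dir() and "KUBERNETES_SERVICE_HOST" in os.environ:
    settings = read_incluster_settings()
    # Print everything except the token itself.
    print(settings["server"], settings["namespace"], settings["ca_cert"])
else:
    print("Not running inside a pod; no service account credentials found.")
```

Run inside test-pod-ubuntu, the token read here belongs to mycontrollersa, so requests made with it are subject to the permissions granted in Step 4.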

The further steps will be executed in the shell.

Step 7: Update the packages via apt and install the Python venv package

apt update

apt install python3-venv

Before we can start writing our Kubernetes controller in Python, we need to make sure that the necessary packages and dependencies are installed inside the test pod.

In this step, we will use the apt package manager to refresh the package index in the Ubuntu container and install the python3-venv package, an Ubuntu package that provides Python's venv module for creating virtual environments.

Running apt update refreshes the list of available packages so that apt install can find current versions; it does not upgrade packages that are already installed. Installing the python3-venv package is necessary for creating a Python virtual environment, which provides an isolated Python environment for our controller to run in.

After updating the packages and installing the necessary dependencies, we can create a Python virtual environment for our controller to run in. We will cover this in the next step of the tutorial.

By updating the packages and installing the necessary dependencies, we can ensure that our controller has access to all the required packages and dependencies and can run without any issues.

Step 8: Create a Python virtualenv

python3 -m venv env

To ensure that our Kubernetes controller runs in an isolated Python environment and doesn’t interfere with other Python packages installed in the test pod, we will create a Python virtual environment.

A Python virtual environment is a self-contained directory tree that contains a Python installation and all the necessary packages and dependencies for our controller to run. This allows us to create a separate environment for our controller that doesn’t affect the rest of the system.

To create a Python virtual environment, we will use the python3 command with the -m venv option, followed by the name of the directory where we want to create the virtual environment. In this tutorial, we will create a virtual environment called env in the current working directory.

Activate the Python virtualenv

# activate

source ./env/bin/activate

After creating the virtual environment, we can activate it to start using it.

By creating a Python virtual environment, we can ensure that our controller runs in an isolated environment and doesn’t interfere with other Python packages installed in the test pod. This is important for maintaining a clean and consistent development environment.
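If you want to confirm from Python that the virtual environment is active, one common check is to compare the interpreter's prefix with its base prefix. This is a small sketch; the `in_virtualenv` helper name is hypothetical.

```python
import sys


def in_virtualenv():
    """True when the interpreter runs from an environment created by venv."""
    # Inside a venv, sys.prefix points at the environment directory while
    # sys.base_prefix still points at the Python installation it was built from.
    return sys.prefix != sys.base_prefix


print("virtualenv active:", in_virtualenv())
print("interpreter prefix:", sys.prefix)
```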

Step 9: Python packages

pip install kubernetes ipython

After creating the Python virtual environment, we need to install the necessary Python packages for our Kubernetes controller.

In this tutorial, we will use the kubernetes and ipython packages, which provide Python bindings for the Kubernetes API and an enhanced interactive Python shell, respectively.

To install the necessary packages, we will use the pip package manager, which is a Python package installer.
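A quick way to confirm that the installation worked is to ask Python whether the packages can be found. This is a minimal sketch with a hypothetical `missing_packages` helper; note that the ipython package is imported under the name IPython.

```python
from importlib.util import find_spec


def missing_packages(names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if find_spec(name) is None]


# An empty list means both packages are importable.
print("missing:", missing_packages(["kubernetes", "IPython"]))
```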

Step 10: Launch the Python interpreter

ipython3

The further steps will be executed inside the IPython shell.

Step 11: Python

from kubernetes import client, config, watch

# Load the service account credentials mounted into the pod
config.load_incluster_config()

# Create Kubernetes API client
v1 = client.CoreV1Api()

# Watch for changes to pods in all namespaces
w = watch.Watch()
for event in w.stream(v1.list_pod_for_all_namespaces):
    # Each event is a dict with the event type and the affected pod object
    print(f"Event: {event['type']} {event['object'].metadata.name}")

As pods come and go, you should see the Python program printing the events.

Here is a sample output:

Event: ADDED hello-task-run-pod

Event: ADDED kube-apiserver-minikube

Event: ADDED kube-controller-manager-minikube

Event: ADDED kube-proxy-8vv4c

Event: ADDED my-cluster-zookeeper-0

Event: ADDED coredns-565d847f94-z4fl7

Event: ADDED etcd-minikube

Event: ADDED storage-provisioner

Event: ADDED argocd-application-controller-0

Event: ADDED argocd-notifications-controller-566bc99494-bvhpp

Event: ADDED my-cluster-kafka-0

Event: ADDED tekton-pipelines-controller-5b8bd8d4c7-zk8sc

Event: ADDED argocd-repo-server-bc9c646dc-l944l

Event: ADDED test-pod-ubuntu

Event: ADDED strimzi-cluster-operator-8684bccc55-z8vx4

Event: ADDED kube-scheduler-minikube

Event: ADDED tekton-pipelines-remote-resolvers-c9dcd8c86-m6wdr
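To extend the loop beyond logging, one option is to route each event to a handler chosen by its type. The following is a minimal sketch of that idea. The handler functions and the `dispatch` helper are hypothetical, and `SimpleNamespace` objects stand in for the pod objects that the watch stream yields, so the sketch runs without a cluster.

```python
from types import SimpleNamespace


def on_added(pod):
    return f"pod {pod.metadata.namespace}/{pod.metadata.name} was added"


def on_modified(pod):
    return f"pod {pod.metadata.namespace}/{pod.metadata.name} changed"


def on_deleted(pod):
    return f"pod {pod.metadata.namespace}/{pod.metadata.name} was deleted"


# Map the event types seen in the watch stream to handlers.
HANDLERS = {"ADDED": on_added, "MODIFIED": on_modified, "DELETED": on_deleted}


def dispatch(event):
    """Call the handler for the event type; ignore types without a handler."""
    handler = HANDLERS.get(event["type"])
    if handler is None:
        return None
    return handler(event["object"])


def fake_pod(namespace, name):
    """Build a stand-in object with the attributes the handlers read."""
    return SimpleNamespace(metadata=SimpleNamespace(namespace=namespace, name=name))


events = [
    {"type": "ADDED", "object": fake_pod("default", "test-pod-ubuntu")},
    {"type": "DELETED", "object": fake_pod("default", "test-pod-ubuntu")},
    {"type": "BOOKMARK", "object": fake_pod("default", "ignored")},
]
for event in events:
    message = dispatch(event)
    if message:
        print(message)
```

In the real loop, the body of the for statement over w.stream(...) would call dispatch(event) instead of printing directly, and each handler could scale a deployment, send a notification, or run a script.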

In the next part, we will package this Python code into an application and a container. We will create the container image, push it to a registry and then install it.
